Adapting UX research for neurodiversity and cognitive disabilities

Digital exclusion isn’t always obvious. For those with cognitive accessibility needs, the barriers can show up in seemingly small ways. Elements like multi-step instructions, unclear navigation, visual distractions, and even ambiguous icons can make everyday digital tasks feel harder than they should (or, worse, impossible to complete). And because cognitive disabilities are often non-apparent and misunderstood, usability issues affecting these groups can be overlooked in the product research and design processes.

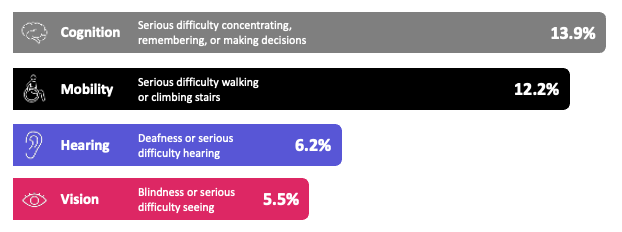

This leaves out critical usability perspectives. According to the CDC, more than 1 in 4 adults in the United States have some type of disability, and cognitive disability is the most prevalent type, affecting nearly 14% of adults.

For UX researchers, this presents both a challenge and an opportunity. Recruiting cognitive testers isn’t always straightforward. Their disabilities may not be visible, they may not identify with a medical label, or they may even hesitate to disclose. Standard research methods also aren’t always designed with this group in mind. But when their perspectives are included, the payoff is building more inclusive digital experiences for all.

What are examples of cognitive disabilities?

Cognitive disabilities affect how people process, remember, and use information. Examples include dyslexia, ADHD, and Alzheimer’s disease. They are related to neurodivergent conditions, but they are not the same. A person can be neurodivergent, cognitively disabled, or both.

A needs-based definition

It’s important not to focus solely on medical labels when it comes to cognitive disability, as this can exclude people. For example, someone without a formal diagnosis can still experience challenges with memory, attention, or reading and writing that impact how they use digital products.

A needs-based approach to cognitive accessibility focuses on exactly what it says: the needs of individuals. For example, if a person has difficulty remembering and recalling information, many digital experiences will be challenging, or even impossible, to complete.

An aging population faces a higher risk

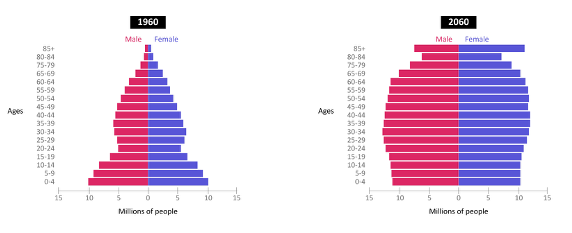

Cognitive disabilities can be present from birth or acquired over time. This is an important distinction when you consider the rapidly aging population. According to the U.S. Census Bureau, the number of people aged 65+ is projected to nearly double, from 49 million in 2016 to 95 million in 2060, making up a significant share of the total population.

Acquired disabilities go beyond well-known conditions like Alzheimer’s and dementia. For example, many women experience brain fog during perimenopause, because of fluctuating estrogen levels and disrupted sleep.

The average age of perimenopause onset is around age 45, and symptoms can last 4 to 10 years. This means a significant share of midlife women are navigating cognitive symptoms that affect daily life. And for most people today, digital experiences are inseparable from daily life.

How does poor UX impact people with cognitive disabilities?

People with cognitive disabilities face barriers when it comes to memory, focus, reading, learning new systems, and processing or navigating digital interfaces.

According to a W3C Working Group Note dated 29 April 2021, “Design, structure, and language choices can make content inaccessible to people with cognitive and learning disabilities.” Here are some ways this can manifest in digital experiences:

Memory barriers

People with impaired short-term memory may struggle to remember passwords or re-enter one-time access codes. What seems like a small friction point can end up locking them out of banking apps, healthcare portals, or essential work tools.

Reading and writing barriers

People with impairments that affect learning can find dense text blocks or multi-step instructions overwhelming. Without clear, plain-language explanations and visual cues, it’s easy to lose track of what to do next.

Focus barriers

People who have difficulty maintaining focus can be derailed by distractions like auto-playing videos, pop-ups, or moving animations. A single interruption can cause them to abandon a task altogether.

Do compliance standards improve accessibility for cognitive disabilities?

Accessibility regulations typically point to WCAG. Many of its success criteria (especially newer ones in WCAG 2.2) are designed to reduce cognitive load and help people complete tasks: avoiding repeated data entry, providing clear error messages, making help easy to find, or ensuring buttons and links are large enough to tap.

These requirements matter. But meeting them through automation alone isn’t enough. Tools can’t tell you if language is confusing, steps feel overwhelming, or navigation is predictable. People who have lived experience with cognitive differences are uniquely good at validating those gaps, by bringing real-world usability to what might otherwise be just a compliance exercise.

Testing with these users reduces barriers for everyone. It also strengthens today’s compliance posture and helps future-proof your product.

Why people with cognitive accessibility needs are often left out of UX research

People with cognitive accessibility needs are still less likely to take part in inclusive design efforts. There are several reasons for this.

Identification challenges

Their disabilities may not be apparent, they may choose not to disclose them, or they may not identify with a medical definition.

Misunderstood

Cognitive differences present in unique ways and are sometimes perceived as “not a real disability.”

Difficult to engage

Recruitment can be challenging, and researchers may fear saying or doing the wrong thing.

Lack of accommodations

Many standard research processes simply aren’t designed with cognitive testers in mind.

“I did one (Fable) engagement where the company was creating a persona of a neurodivergent person, and they wanted to know what they had missed. When I got out of that meeting I felt like I was 100 feet tall because I was able to contribute and find things they hadn’t thought about.”

Donna B.

Fable Community member

Pilot study insights: tailoring UX research methodologies for cognitive testers

To better address these challenges, we conducted a pilot study that explored how to adapt UX research methodologies for people with cognitive accessibility needs.

5 UX research method adaptations to implement now

We identified five areas where small but meaningful changes can make research more effective and inclusive for cognitive testers.


| Practical adaptation | Supporting pilot findings | How to action |
|---|---|---|
| #1 Avoid hypothetical questions | Hypothetical instructions confused participants when we referenced a task they hadn’t completed (e.g., “Let’s say you have already watched this video…”). | Use concrete language and real examples to help cognitive participants stay grounded in the task at hand. |
| #2 Build rapport and be clear about purpose | Engaging in casual conversation at the beginning of the session helped to foster a safe space, and participants seemed more at ease to share feedback. Stating that the study is “not a test of your ability, but to gather feedback” helped participants focus on the tasks rather than performing them “correctly.” | Take time to build trust before starting tasks, and clarify that the goal is to test the product, not the participant. |
| #3 Prompt frequently to keep cognitive participants on task | Some pilot study participants strayed from the intended tasks, even when given clear instructions. We settled on leading the participant to the starting point for each task since the task evaluation didn’t include exploring the ease of locating the starting point. | Use frequent, gentle prompts to help participants stay focused and reduce frustration. |
| #4 Prioritize moderated sessions for best results | Pilot participants had more challenges completing tasks and sharing feedback in unmoderated sessions than in moderated sessions. We continue to iterate on methods of performing unmoderated research with this audience group outside of the pilot study. | When possible, choose moderated sessions when working with testers with cognitive disabilities. A facilitator can remove distractions, reduce confusion, and make space for participants to share richer feedback. |
| #5 Adapt talk aloud protocols to offer a do-first buffer | Our research suggested that a cognitive audience might not be able to follow a talk aloud protocol (i.e., sharing their thoughts and feelings while they are completing tasks). We did find that some participants needed to silently complete tasks first and then share their feedback. | Give participants the option to complete a task silently before asking them to explain their thought process. This small adjustment reduces pressure while capturing more authentic task behavior. |

Making UX research methods work for cognitive testers

The most prevalent disability is also the least visible. Bringing people with cognitive accessibility needs into your research is critical to drive widespread digital product usability. It’s easier than you think to get started.

First, commit to including an appropriate number of people with neurodivergence and cognitive disabilities in your participant mix. (Remember: cognitive disabilities affect around 14% of U.S. adults.)
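As a rough illustration of that participant-mix arithmetic, here is a minimal Python sketch. It assumes that "appropriate" means at least proportional to the roughly 14% CDC prevalence figure cited in this article, which is only one possible reading. The function name `min_cognitive_participants` and the sample panel sizes are hypothetical, chosen purely for the example.

```python
# Assumption: "appropriate" is read as proportional representation, using the
# ~14% CDC prevalence figure quoted in this article. Your study goals may call
# for a higher share.
COGNITIVE_PREVALENCE_PCT = 14


def min_cognitive_participants(panel_size: int,
                               prevalence_pct: int = COGNITIVE_PREVALENCE_PCT) -> int:
    """Smallest whole number of participants that meets the prevalence share.

    Uses integer arithmetic to round up, and never returns fewer than one
    participant for a non-empty panel.
    """
    if panel_size <= 0:
        return 0
    rounded_up = (panel_size * prevalence_pct + 99) // 100
    return max(1, rounded_up)


if __name__ == "__main__":
    # Hypothetical panel sizes, for illustration only.
    for size in (5, 12, 30, 50):
        print(f"panel of {size}: at least {min_cognitive_participants(size)}")
```

Treat the result as a floor rather than a target: even a five-person study would include at least one participant with cognitive accessibility needs under this reading.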

Then, use our free Cognitive Accessibility Engagement Guide to make practical adaptations to your UX research methodologies. Here’s what’s inside:

- Best practices and what to avoid

- Steps to take before and during your session

- How to approach building your discussion guide

- Two downloadable discussion guide templates: Information Interview and Evaluative Usability.

If you aren’t sure how to start recruiting cognitive accessibility testers, Fable can help. Reach out to learn more.

Frequently asked questions

What’s the difference between neurodiversity and cognitive disabilities?

The term “neurodivergent” describes natural differences in how people think and process information. Cognitive disabilities are a disability category describing challenges with cognitive functions, including memory, learning, attention, problem solving, language or executive functioning. Some people are both neurodivergent and have cognitive disabilities, but the two groups are not the same. For example, a person with dementia has a cognitive disability but wouldn’t be described as neurodivergent, while a person with autism spectrum disorder might be both, depending on the context.

What are examples of cognitive disabilities?

These disabilities include conditions that affect memory, attention, learning, and problem-solving. Examples include executive function disorder, short-term memory impairments, multiple sclerosis, traumatic brain injury (TBI), and dementia. These disabilities can make digital tasks like reading, remembering steps, or processing instructions more difficult.

Why are cognitive disabilities often called “invisible”?

They’re labeled invisible because there are usually no physical indicators that signal someone’s needs. Challenges with memory, attention, processing, or learning aren’t outwardly visible the way some physical disabilities may be. As a result, cognitive accessibility needs can be harder to identify, and people may not receive the same immediate accommodations that someone using a wheelchair or a white cane might, unless they choose to disclose their needs.

How common are cognitive disabilities?

Cognitive disabilities are widespread. According to the CDC, around 14% of U.S. adults “have a cognition disability with serious difficulty concentrating, remembering, or making decisions.” The need for cognitive accessibility accommodations also rises with the aging population. According to the Alzheimer’s Association, approximately 12% to 18% of people age 60 or older are living with mild cognitive impairment (MCI). An estimated 10% to 15% of individuals living with MCI develop dementia each year. About one-third of people living with MCI due to Alzheimer’s disease develop dementia within five years.

How does poor UX impact people with cognitive disabilities?

Poor UX disproportionately impacts people with cognitive disabilities because elements like overwhelming forms, unclear instructions, distracting pop-ups, and confusing navigation create significant cognitive load. These barriers can lead to frustration, mistakes, and even task abandonment.

Importantly, many of the challenges this group faces stem from basic usability issues — not niche or specialized needs. When teams fix these barriers, the improvements don’t just support people with cognitive disabilities; they make the experience clearer, more intuitive, and more efficient for all users.

How can UX researchers adapt methods for cognitive testers?

UX researchers can adapt their methods for cognitive testers by prioritizing comfort and making it easier for users to share feedback. This includes avoiding hypothetical questions, focusing on concrete tasks, building rapport, providing check-ins, and adjusting think-aloud protocols to reduce cognitive load.

Methods designed for cognitive accessibility, such as Fable’s adapted Accessible Usability Scale (AUS), also help accurately capture a participant’s usability perspective. Together, these practices create a supportive environment where participants can share feedback in ways that feel natural and manageable.